Things I learned migrating from Digital Ocean to AWS Lightsail

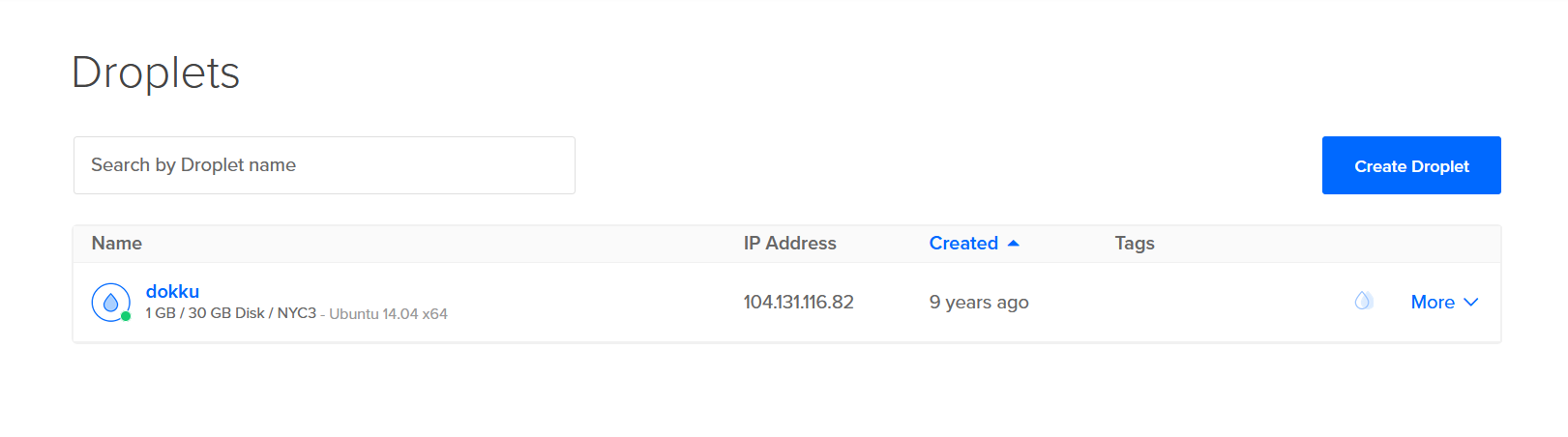

It's been 9 years since I spun up my Digital Ocean instance. It has been hosting my personal site, blog, random personal projects, and has even faced one or two Hacker News front-page traffic spikes.

Why is it named dokku, you wonder, oh young reader? Well, back before Kubernetes was a thing, and right when Docker was starting to become popular, a tool came out that promised an easy way to maintain your own Platform as a Service: dokku. When I set up my machine, I planned to use dokku for every project. Reality showed me that deploying through dokku was just too much effort when I owned the whole infrastructure (a single instance).

In practice, that meant my tiny Digital Ocean instance became a dumpster fire, full of random folders whose purpose I no longer knew, several database engines installed (with their daemons still kicking), and random broken Python virtual environments.

Additionally, since my domain became semi-popular for a while, it somehow ended up on bots' lists of target domains. So it has been constantly under attack by bots, which brought down some of the services I personally use. Due to my own incompetence when I set up the instance, things like HTTPS and Cloudflare were not an option.

So my plan was to migrate to a new instance. In particular, I wanted to use AWS Lightsail, mostly because that's the cloud platform I'm most comfortable with, and it opens the door to potentially more complex projects.

It's been a while since I had time to nerd out and work on some side projects (having kids is the ultimate time sink). But after postponing the migration for a while, I finally killed my trusty DO instance today.

Here are some random thoughts I wrote down while I was painstakingly migrating the services and, hopefully, setting them up in a more structured way.

- HTTPS is hard! Not extremely hard, but I can see how less technical folks would have a hard time getting it set up. Let's Encrypt seems to be the main free way to get certificates these days, and there are a few magical commands that need to be run in order to set up certificates the right way. Related to this:

- You need to open port 443 for HTTPS in the Lightsail instance's firewall. I found no mention of this when googling 'lightsail setup https'. I understand most people who use Lightsail just want a prepackaged WordPress, but that is not always the case.

- certbot's default nginx settings do not work out of the box if you use a custom subdomain (my blog is hosted at blog.manugarri.com). certbot is awesome nonetheless.

- I use Ghost as my blogging platform. Ghost is tremendously unhelpful when you want to migrate content. I tried importing the content from the old blog and just got this message:

```
"Please install Ghost 1.0, import the file and then update your blog to the latest Ghost version.\nVisit https://ghost.org/docs/update/ or ask for help in our https://forum.ghost.org."
IncorrectUsageError: Detected unsupported file structure.
```

Which I understand, but I fail to see how hard it would be to keep backwards compatibility for what is basically a simple JSON structure like this:

```json
{
  "id": 2,
  "uuid": "de30db4d-fdde-48b8-8548-fd3c9804cfb0",
  "title": "How to easily set up Subdomain routing in Nginx",
  "slug": "how-to-easily-set-up-subdomain-routing-in-nginx",
  "markdown": "ARTICLE MARKDOWN HERE",
  "image": null,
  "featured": 0,
  "page": 0,
  "status": "published",
  "language": "en_US",
  "meta_title": null,
  "meta_description": null,
  "author_id": 1,
  "created_at": "2014-09-30 00:42:27",
  "created_by": 1,
  "updated_at": "2014-09-30 03:21:56",
  "updated_by": 1,
  "published_at": "2014-09-30 00:42:27",
  "published_by": 1,
  "visibility": "public",
  "mobiledoc": null,
  "amp": null
}
```

Like, which killer feature was so extremely awesome, yet so critically different, that it forced people to jump through the hoops of spinning an old Ghost instance back up, fighting with the updates, and then dumping and migrating? The content is the same, for fuck's sake.
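Before patching the old export, it can help to list exactly which fields the newer importer expects that the old dump lacks. Below is a minimal sketch of that comparison. `missing_fields` is a hypothetical helper, not part of Ghost; it assumes both exports use the `["db"][0]["data"][table]` layout (the one the conversion script in this post indexes into) and that every table is a list of row objects.

```python
def missing_fields(old_export: dict, new_export: dict) -> dict:
    """Compare two Ghost JSON exports table by table (hypothetical helper).

    Assumes both exports look like {"db": [{"data": {table: [row, ...]}}]}.
    Returns {table_name: sorted column names} for every table in new_export
    whose rows carry fields never seen in old_export's rows of the same table.
    A table absent from old_export therefore lists all of its fields.
    """
    old_tables = old_export["db"][0]["data"]
    new_tables = new_export["db"][0]["data"]
    report = {}
    for table, new_rows in new_tables.items():
        # Union of keys over all rows; an empty table yields an empty set.
        new_keys = set().union(*(row.keys() for row in new_rows))
        old_keys = set().union(*(row.keys() for row in old_tables.get(table, [])))
        missing = sorted(new_keys - old_keys)
        if missing:
            report[table] = missing
    return report
```

Call it with the two `json.load`-ed exports. Because it reads column names from actual rows, a table that is empty in the new export reports nothing, so an export containing at least one post makes a more useful reference.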

I had to build my own shitty script to take a valid (empty) dump from my new blog instance, then make the old dump compatible by adding the missing fields and splitting the posts into import files of 10 posts each:

```python
import json
from itertools import islice


def batched(iterable, chunk_size):
    """Yield successive tuples of up to chunk_size items.

    On Python 3.12+, itertools.batched does the same thing.
    """
    iterator = iter(iterable)
    while chunk := tuple(islice(iterator, chunk_size)):
        yield chunk


# Export from the new (empty) Ghost instance: a structure the importer accepts.
valid_file = "manugarris-blog.ghost.2023-05-27-15-13-43.pretty.json"
# Export from the old Ghost instance, which holds the actual posts.
posts_file = "manuel-garridos-blog.ghost.2023-05-27.pretty.json"

with open(valid_file) as fname:
    valid_file_data = json.load(fname)

with open(posts_file) as fname:
    old_posts_data = json.load(fname)

posts = old_posts_data["db"][0]["data"]["posts"]
n_posts_per_batch = 10

# enumerate gives each batch its own file; a counter that is never
# incremented would make every batch overwrite batch_dump.0.json.
for i, posts_batch in enumerate(batched(posts, n_posts_per_batch)):
    # The new export format has a posts_authors table linking posts to
    # authors; the old export lacks it, so every post goes to author "1".
    posts_authors = [
        {
            "id": post["id"],
            "post_id": post["id"],
            "author_id": "1",
            "sort_order": 0,
        }
        for post in posts_batch
    ]
    # Reuse the valid skeleton, swapping in this batch of old posts.
    valid_file_data["db"][0]["data"]["posts"] = list(posts_batch)
    valid_file_data["db"][0]["data"]["posts_authors"] = posts_authors

    out_name = f"batch_dump.{i}.json"
    with open(out_name, "w") as fname:
        print(out_name)
        json.dump(valid_file_data, fname)
```

Anyway, the migration took 3 Saturdays, so it wasn't the end of the world. I'm amazed that I have been able to run so many side projects, sites, and blogs on a 5 USD/month instance for 9 years without updating it. Having my own machine allowed me to grow significantly as an engineer, and the cost was totally worth it.
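Back to the Lightsail port 443 gotcha from the list above: if you'd rather script the firewall change than do it in the console, here is a minimal sketch using boto3's Lightsail client (`get_instance_port_states` and `open_instance_public_ports`). It assumes boto3 is installed and AWS credentials are configured; `ensure_https_port_open` and the instance name in the usage line are hypothetical.

```python
def ensure_https_port_open(client, instance_name: str) -> bool:
    """Open TCP 443 on a Lightsail instance's firewall if it is not already open.

    Assumes `client` is a boto3 Lightsail client, e.g. boto3.client("lightsail").
    Returns True if a rule for 443 was added, False if 443 was already open.
    Only ports, protocol and state are checked; CIDR restrictions are ignored.
    """
    response = client.get_instance_port_states(instanceName=instance_name)
    for state in response["portStates"]:
        covers_443 = state["fromPort"] <= 443 <= state["toPort"]
        tcp_allowed = state["protocol"] in ("tcp", "all")
        if covers_443 and tcp_allowed and state["state"] == "open":
            return False
    # open_instance_public_ports adds a rule without replacing the existing ones
    # (put_instance_public_ports would replace the whole rule set).
    client.open_instance_public_ports(
        instanceName=instance_name,
        portInfo={"fromPort": 443, "toPort": 443, "protocol": "tcp"},
    )
    return True
```

Usage would look like `ensure_https_port_open(boto3.client("lightsail"), "my-instance")`. The client is passed in rather than created inside the function so the logic can be tried against a stub without touching AWS.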